
What Are Counterfactual Explanations In AI?

It’s also important that other kinds of stakeholders better understand a model’s decision. Facial recognition software used by some police departments has been known to lead to false arrests of innocent people. People of color seeking loans to purchase homes or refinance have been overcharged by hundreds of thousands because of AI tools used by lenders. And many employers use AI-enabled tools to screen job applicants, many of which have proven to be biased against people with disabilities and other protected groups.

Shapley Additive Explanations (SHAP)

In 1972, the symbolic reasoning system MYCIN was developed to explain the reasoning for diagnostic-related purposes, such as treating blood infections. Explainable AI-based systems build trust between military personnel and the systems they use in combat and other applications. The Defense Advanced Research Projects Agency, or DARPA, is developing XAI in its third wave of AI systems. ChatGPT isn’t considered explainable AI because it isn’t able to explain how or why it provides certain outputs.

What Is LIME (Local Interpretable Model-Agnostic Explanations)?

AI models predicting property prices and investment opportunities can use explainable AI to clarify the variables influencing these predictions, helping stakeholders make informed decisions. AI-based learning systems use explainable AI to offer personalized learning paths. Explainability helps educators understand how AI analyzes students’ performance and learning styles, allowing for more tailored and effective educational experiences. To address stakeholder needs, the SEI is developing a growing body of XAI and responsible AI work. In a month-long, exploratory project titled “Survey of the State of the Art of Interactive XAI” from May 2021, I collected and labelled a corpus of 54 examples of open-source interactive AI tools from academia and industry.

- The most common approach to achieving Explainable AI is to use methods like LIME, SHAP, and rule-based methods.

- This can lead to models that are still powerful, but with behavior that’s much easier to explain.

- A responsible artificial intelligence solution allows companies to engender confidence and scale AI safely by designing, developing, and deploying it with good intentions to empower employees, businesses, and society.

- Permutation importance is a technique used to understand the influence of different variables in the AI model.

- Additionally, Large Language Models (LLMs) like GPT-4 contain intricate internal representations that capture various aspects of language and knowledge.

Managing Financial Processes And Stopping Algorithmic Bias

Permutation importance is a technique used to understand the influence of different variables in the AI model. It works by permuting (shuffling) the values of one or more variables to see how much the model’s accuracy decreases. If a variable is important, randomizing its values will noticeably reduce accuracy. But if the variable isn’t as important, randomizing its values will have a minimal effect.
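The shuffling procedure can be illustrated with a minimal sketch in plain Python. Everything named here is an assumption made for illustration: the toy data set, the `toy_model` function, and the `permutation_importance` helper are hypothetical and are not taken from any particular library. The helper measures the average drop in accuracy when one column at a time is shuffled.

```python
import random

def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for col in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle only this column, leaving the other columns intact.
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, shuffled)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Toy data (assumed): the label depends on feature 0 only; feature 1 is noise.
data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [int(row[0] > 0.5) for row in X]

def toy_model(row):
    """Hypothetical stand-in for a trained model; it only looks at feature 0."""
    return int(row[0] > 0.5)

importances = permutation_importance(toy_model, X, y)
print(importances)
```

In this sketch, shuffling feature 0 should cut accuracy substantially, while shuffling feature 1 leaves it unchanged because the toy model never reads that column. With a real model, the same idea is usually applied on held-out data rather than the training set.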

Technical complexity drives the need for more sophisticated explainability techniques. Traditional methods of model interpretation may fall short when applied to highly complex systems, necessitating the development of new approaches to explainable AI that can handle the increased intricacy. In a similar vein, while papers proposing new XAI techniques are abundant, real-world guidance on how to select, implement, and test these explanations to support project needs is scarce. Explanations have been shown to improve understanding of ML systems for many audiences, but their ability to build trust among non-AI experts has been debated.

XAI plays a crucial role in ensuring accountability, fairness, and ethical use of AI in various applications. The many faces of XAI highlight the varied approaches needed to make AI systems transparent, accountable, and trustworthy. By using a mix of explainability, interpretability, feature importance, and other techniques, we can gain a comprehensive understanding of AI models. This understanding is important not only for improving the models themselves but also for ensuring their safe and fair deployment in real-world applications.

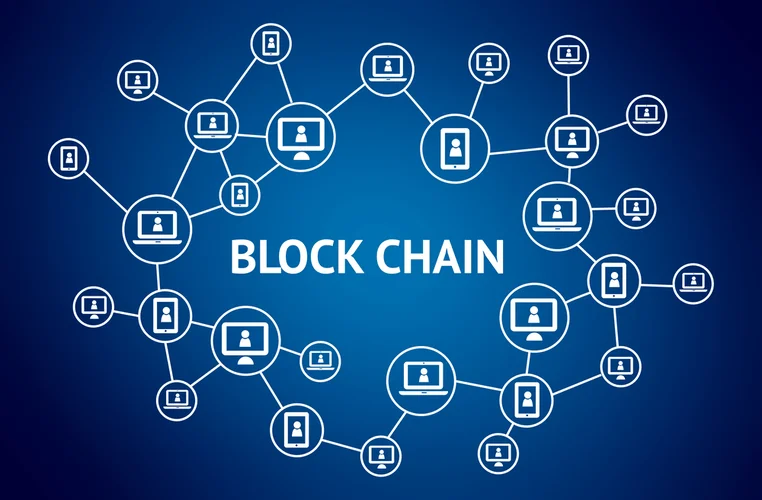

Explainable AI (XAI) is a set of tools and frameworks that make artificial intelligence systems transparent and understandable. It focuses on creating AI that not only delivers accurate results but also offers understandable explanations for its decisions. However, the complexity of modern AI models poses significant challenges for explainability.

The U.S. Department of Health and Human Services lists an effort to “promote ethical, trustworthy AI use and development,” including explainable AI, as one of the focus areas of its AI strategy. Another subject of debate is the value of explainability compared to other methods for providing transparency. Although explainability for opaque models is in high demand, XAI practitioners run the risk of over-simplifying and/or misrepresenting complicated systems.

Even if the inputs and outputs were known, the AI algorithms used to make decisions were often proprietary or weren’t easily understood. Generative AI describes an AI system that can generate new content like text, images, video or audio. Explainable AI refers to techniques or processes used to help make AI more understandable and transparent for users. Explainable AI can be applied to generative AI systems to help clarify the reasoning behind their generated outputs. Explainable AI makes artificial intelligence models more manageable and understandable.

Furthermore, by providing the means to scrutinize the model’s decisions, explainable AI enables external audits. Regulatory bodies or third-party experts can assess the model’s fairness, ensuring compliance with ethical standards and anti-discrimination laws. This creates an additional layer of accountability, making it easier for organizations to foster fair AI practices. Explainable AI is essential for ensuring the safety of autonomous vehicles and building user trust. An XAI model can analyze sensor data to make driving decisions, such as when to brake, accelerate, or change lanes.

While any type of AI system can be explainable when designed as such, GenAI often isn’t. Scalable Bayesian Rule Lists (SBRLs) help explain a model’s predictions by combining pre-mined frequent patterns into a decision list generated by a Bayesian statistics algorithm. This list is composed of “if-then” rules, where the antecedents are mined from the data set and the set of rules and their order are learned. Explainable AI can also simplify the process of model evaluation while increasing model transparency and traceability.
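To show what an ordered list of “if-then” rules looks like at prediction time, here is a small sketch. It does not implement SBRL learning: the rules, their order, the feature names, and the risk values are all hypothetical and hand-written for illustration, whereas a real SBRL would mine the antecedents from data and learn the rule set and ordering. The sketch only shows why the resulting model is easy to explain: the first matching rule is both the prediction and the explanation.

```python
# Hypothetical decision list: (description, condition, predicted risk).
# A real SBRL would learn these rules and their order from data.
RULES = [
    ("income < 20000 and missed_payments >= 2",
     lambda a: a["income"] < 20000 and a["missed_payments"] >= 2,
     0.85),
    ("missed_payments >= 2",
     lambda a: a["missed_payments"] >= 2,
     0.55),
    ("income < 20000",
     lambda a: a["income"] < 20000,
     0.30),
]
DEFAULT_RISK = 0.10  # Assumed fallback when no rule matches.

def predict_with_explanation(applicant):
    """Return (risk, explanation) from the first rule whose condition holds."""
    for description, condition, risk in RULES:
        if condition(applicant):
            return risk, f"if {description} then risk = {risk}"
    return DEFAULT_RISK, f"else risk = {DEFAULT_RISK}"

risk, why = predict_with_explanation({"income": 45000, "missed_payments": 3})
print(risk, "|", why)
```

Because rules are checked in order, the position of a rule matters as much as its condition, which is why the ordering is part of what an SBRL learns.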

For example, consider the case of risk modeling for approving personal loans to customers. Global explanations will reveal the key factors driving credit risk across the lender’s entire portfolio and assist in regulatory compliance. XAI techniques can help develop accountability, fairness, and transparency and avoid discriminatory bias in public administration, as well as foster better acceptance of and trust in AI systems. AI algorithms used in cybersecurity to detect suspicious activities and potential threats must provide explanations for each alert.
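To make the loan example more concrete, the sketch below contrasts a global explanation with a per-applicant (local) one for a simple linear risk score. The weights, feature names, and three-applicant portfolio are invented for illustration and do not come from any real lender or library. For a linear score, one common convention is to take each feature's contribution as its weight times the feature's deviation from the portfolio average, and to summarize global importance as the mean absolute contribution.

```python
# Hypothetical weights of a linear credit-risk score (illustrative only).
WEIGHTS = {"debt_ratio": 2.0, "missed_payments": 0.5, "years_employed": -0.1}

def portfolio_mean(portfolio):
    """Average value of each feature across all applicants."""
    return {f: sum(a[f] for a in portfolio) / len(portfolio) for f in WEIGHTS}

def local_explanation(applicant, mean):
    """Each feature's contribution to this applicant's score vs. the average applicant."""
    return {f: w * (applicant[f] - mean[f]) for f, w in WEIGHTS.items()}

def global_explanation(portfolio):
    """Mean absolute contribution of each feature across the whole portfolio."""
    mean = portfolio_mean(portfolio)
    totals = {f: 0.0 for f in WEIGHTS}
    for applicant in portfolio:
        for f, contribution in local_explanation(applicant, mean).items():
            totals[f] += abs(contribution)
    return {f: total / len(portfolio) for f, total in totals.items()}

# Assumed toy portfolio of three applicants.
portfolio = [
    {"debt_ratio": 0.2, "missed_payments": 0, "years_employed": 10},
    {"debt_ratio": 0.6, "missed_payments": 4, "years_employed": 2},
    {"debt_ratio": 0.4, "missed_payments": 2, "years_employed": 6},
]

global_view = global_explanation(portfolio)
local_view = local_explanation(portfolio[1], portfolio_mean(portfolio))
print("global:", global_view)
print("local (applicant 2):", local_view)
```

The global view is the kind of summary that supports portfolio-level reporting, while the local view is what an individual applicant or underwriter would look at for a single decision. Non-linear models need other attribution methods, such as SHAP or LIME, to produce comparable per-feature contributions.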

It’s important to note that the concept of XAI is still in its early stages, and there is ongoing research and development work in the field. Therefore, many of the best practices and techniques for Explainable AI are still evolving. For instance, let’s say that when an AI model “sees” a picture of a forest with some animals, it draws a circle around the animals. Feature visualization helps you “peek” inside the model’s mind to identify the characteristics that activate particular neurons. Generative AI, exemplified by models like GPT-4 and DALL-E, has opened up new possibilities for creating realistic and creative content.

They thereby help you to decide whether to trust your model, but they don’t actually make the original model trustworthy. End users affected by AISam may want to contest the AI’s decision, or check that it was fair. End users have a legal “right to explanation” under the EU’s GDPR and the Equal Credit Opportunity Act in the US. Domain experts & business analysts: explanations enable underwriters to verify the model’s assumptions, as well as share their expertise with the AI.

Traditional AI approaches, like deep learning neural networks, can be seen as ‘black boxes’ because it’s hard to understand how and why they make decisions. Explainable AI techniques provide insights into AI systems, enabling people to understand and validate the decision-making process. This work laid the foundation for many of the explainable AI approaches and techniques that are used today and provided a framework for transparent and interpretable machine learning. In conclusion, explainable artificial intelligence (XAI) plays a pivotal role in enhancing the transparency, accountability, and trustworthiness of AI systems.